AI tools have emerged since my first blog post about how I write documentation, prompting me to rethink how I draft, review, and refine content.

Much of the conversation around AI in documentation focuses on efficiency, with AI agents generating outputs that humans revise. I prefer to use AI as an interactive collaborator to:

- Preserve my creativity, judgment, and ownership.

- Keep humans at the core of the documentation process.

In this post, I describe how I collaborate with AI assistants to draft documentation in a domain where clarity, accuracy, and audience trust matter. My concrete example is release notes that announce my development teams’ new features and enhancements.

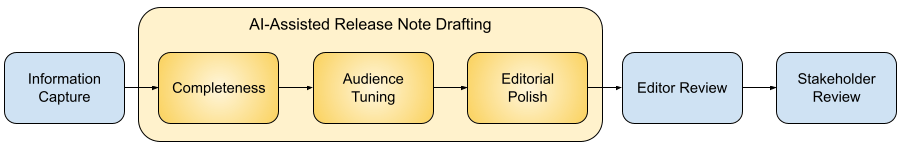

My AI-Assisted Release Note Drafting Process

I always create the first raw release notes draft manually by:

- Systematically reviewing available documentation for new features and enhancements.

- Verifying my understanding through testing and discussions with development teams.

At this stage, the cognitive process matters more than the output. Drafting manually enables me to:

- Develop a deep understanding of the changes that my teams deliver.

- Critically assess any output generated by AI.

Then, I collaborate with AI assistants to:

- Ensure the completeness of the release notes.

- Tailor drafts to the target audience.

- Polish the language and readability.

At the end of the drafting process, I have a strong first draft for editor and stakeholder review.

Meet My Assistants

I’ve curated a set of AI assistants that each have a specific role to help me elevate my release notes drafts.

Release Notes Generation Assistant

| Aspect | Description |
| --- | --- |
| Goal | Help me complete a first release note draft that includes all required information. |
| Role | Coverage and completeness check. |
| Task | Create a release note draft from Jira epics and stories, which capture development work and feature details. |
| Persona | A technical writer who produces release notes for new features and enhancements. |
| When I use it | After I create my initial manual draft. |
| Notes | I combine my manual draft with the AI-generated draft, applying critical judgment and fact-checking to produce a comprehensive and accurate first complete draft. |

Audience Review Assistant

| Aspect | Description |
| --- | --- |
| Goal | Help me improve clarity, usability, and audience relevance. |
| Role | User advocate. |
| Task | Ask clarifying questions from the perspective of a reader, helping anticipate questions and concerns. |
| Persona | The target audience for my release notes: a user trying to understand what we’re delivering, the impact on their current workflows, and whether they want to use the new feature or enhancement. |
| When I use it | Once I have a complete first draft that includes all required information. |
| Notes | I engage in a conversational review loop with this assistant, incorporating its questions and concerns to refine explanations, anticipate user questions, and improve overall clarity and usability. |

Editorial Assistant

| Aspect | Description |
| --- | --- |
| Goal | Help me deliver a polished, style-compliant release note draft. |
| Role | Editorial quality and style compliance. |
| Task | Highlight deviations from company voice and style guide and suggest improvements. |
| Persona | An editor who ensures style guide adherence, grammar, and spelling accuracy. |
| When I use it | When I have a complete draft that I’ve tailored to the target audience. |
| Notes | I collaborate with the assistant in a review loop where it identifies stylistic inconsistencies, grammar issues, typos, and style guide deviations. I apply editorial improvements while maintaining accuracy and intent. |
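One way to keep assistant definitions like these consistent is to store them as structured data and render the instructions from that data. The following is a minimal sketch in Python, not the setup used for this post: the `Assistant` class, the `build_instructions` function, and the wording of the generated instructions are all hypothetical, and the sketch deliberately stops short of calling any AI service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assistant:
    """One assistant definition, mirroring the rows of the tables above."""
    name: str
    goal: str
    role: str
    task: str
    persona: str
    when_to_use: str


def build_instructions(assistant: Assistant) -> str:
    """Render a definition as plain-text instructions (hypothetical wording)."""
    return "\n".join([
        f"You are acting as: {assistant.persona}",
        f"Your role: {assistant.role}",
        f"Your task: {assistant.task}",
        f"The writer's goal: {assistant.goal}",
    ])


# Listed in the order the assistants are used during drafting.
ASSISTANTS = [
    Assistant(
        name="Release Notes Generation Assistant",
        goal="Help me complete a first release note draft that includes all required information.",
        role="Coverage and completeness check.",
        task="Create a release note draft from Jira epics and stories.",
        persona="A technical writer who produces release notes for new features and enhancements.",
        when_to_use="After I create my initial manual draft.",
    ),
    Assistant(
        name="Audience Review Assistant",
        goal="Help me improve clarity, usability, and audience relevance.",
        role="User advocate.",
        task="Ask clarifying questions from the perspective of a reader.",
        persona="A user trying to understand what we're delivering and how it affects their workflows.",
        when_to_use="Once I have a complete first draft.",
    ),
    Assistant(
        name="Editorial Assistant",
        goal="Help me deliver a polished, style-compliant release note draft.",
        role="Editorial quality and style compliance.",
        task="Highlight deviations from company voice and style guide and suggest improvements.",
        persona="An editor who ensures style guide adherence, grammar, and spelling accuracy.",
        when_to_use="When I have a complete draft tailored to the target audience.",
    ),
]

if __name__ == "__main__":
    # Print each assistant's instructions in drafting order.
    for assistant in ASSISTANTS:
        print(f"--- {assistant.name} (use: {assistant.when_to_use}) ---")
        print(build_instructions(assistant))
```

Keeping the persona, role, task, and goal as separate fields makes it easy to adjust one aspect of an assistant without rewriting the rest, and the list order documents when each assistant enters the drafting process.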

How AI Improves My Release Notes

Overall, AI assistants help me produce higher-quality release notes while keeping me in full control.

They help me ensure the content is clear, complete, and accurate before it reaches product managers. This helps reduce review cycles and back-and-forth with stakeholders.

They also provide useful examples and phrasing, helping me brainstorm how to communicate complex concepts or steps.

My favorite AI assistant is the Audience Review Assistant. It identifies statements that could confuse or alarm users, enabling me to reword drafts to reduce the risk of misinterpretation or unnecessary concern. It also asks thoughtful questions that prompt me to add information I might not have considered.

Examples of updates prompted by the Audience Review Assistant:

- Clarified that an enhancement doesn’t change existing configurations, reducing the risk of alarm.

- Specified the length of a new instructional text field, helping users prepare instructions before the feature is delivered.

Lessons Learned

Experimenting with AI assistants has taught me that:

- Manual drafting is essential: it engages my critical thinking and enables me to fact-check AI-generated output.

- Human interaction is irreplaceable: editors and product managers provide insights, context, and real-world examples that AI can’t replicate.

Conclusion

Using AI tools as interactive collaborators rather than time-saving tools increases my job satisfaction and the quality of my documentation. In technical writing, AI is most effective when it supports human expertise and collaboration, not when it attempts to replace the critical thinking and insights that come from working with people.

And while I’m not delivering release notes faster, I’m ultimately saving time for editors, customer support teams, and product managers by reducing:

- Review cycles.

- Customer questions.

This approach reflects how I work as a technical writer: deeply understanding the subject, collaborating with humans and tools thoughtfully, and prioritizing user trust over speed.